Section: New Results

Collaboration

Participants: Michel Beaudouin-Lafon, Cédric Fleury, Wendy Mackay, Can Liu, Ignacio Avellino Martinez.

ExSitu explores new ways to support collaborative interaction, especially within and across large interactive spaces such as those of the Digiscope network (http://digiscope.fr/). We started to investigate how to support telepresence among large, heterogeneous interactive spaces [24], [25]. In particular, we studied how accurately a user can interpret deictic gestures in a video feed of a remote user [12]. These deictic gestures convey important non-verbal cues in communication between remote users. We also created Webstrates [18], an environment for exploring shareable dynamic media and the concept of information substrate.

Telepresence among large, heterogeneous interactive spaces – Large interactive spaces are powerful tools that can help scientific, industrial and business users collaborate on large and complex data sets. To reach their full potential, these spaces must support not only local collaboration but also collaboration with remote users, who may have significantly different display and interaction capabilities, for example when a wall display is connected to an immersive CAVE.

We explain why supporting telepresence across large interactive spaces is critical for remote collaboration [24]. We have also started to explore how such asymmetric interaction capabilities provide interesting opportunities for new collaboration strategies in large interactive spaces [25].

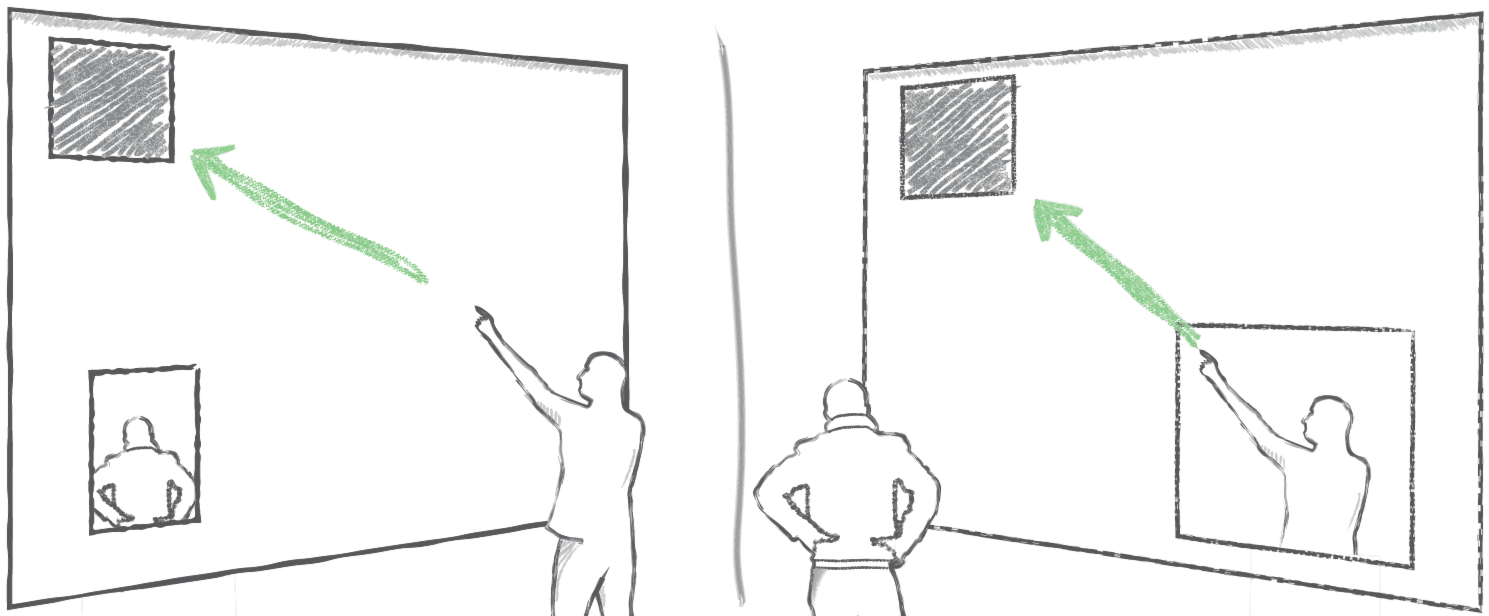

Accuracy of deictic gestures for telepresence – In the context of telepresence on large wall-sized displays, we investigated how accurately a user can interpret the video feed of a remote user who indicates a shared object on the display, either by looking at it or by looking and pointing at it (Figure 6) [12]. We also analyzed how sensitive distance and angle errors are to the relative position between the remote viewer and the video feed. We showed that users can accurately determine the target, that eye gaze alone is interpreted more accurately than eye gaze combined with hand pointing, and that the relative position between the viewer and the video feed has little effect on accuracy. These findings can inform the design of future telepresence systems for wall-sized displays.
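To make the two error measures concrete, here is a minimal Python sketch of how distance and angle errors could be computed for one trial. The definitions are assumptions made for illustration, not necessarily those used in [12]: the wall is modeled as the plane z = 0, targets are (x, y) positions on it in meters, and the angle is measured from a hypothetical eye position `eye` given as (x, y, z). The function names are ours.

```python
import math


def distance_error(actual, perceived):
    """Euclidean distance on the wall plane between two (x, y) points."""
    return math.dist(actual, perceived)


def angle_error(eye, actual, perceived):
    """Angle in degrees, seen from `eye`, between the actual and perceived targets.

    `eye` is an (x, y, z) position in front of the wall, which is assumed
    to lie in the plane z = 0.
    """
    # Vectors from the eye to each target on the wall.
    to_actual = (actual[0] - eye[0], actual[1] - eye[1], -eye[2])
    to_perceived = (perceived[0] - eye[0], perceived[1] - eye[1], -eye[2])
    dot = sum(a * p for a, p in zip(to_actual, to_perceived))
    norms = math.hypot(*to_actual) * math.hypot(*to_perceived)
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / norms))
    return math.degrees(math.acos(cosine))
```

For example, with the eye 1 m in front of the actual target and a perceived target 1 m to its side, `distance_error((0, 0), (1, 0))` is 1 m and `angle_error((0, 0, 1), (0, 0), (1, 0))` is 45 degrees.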


Webstrates – In collaboration with Aarhus University (Denmark) and Institut Mines Telecom, we created Webstrates [18], a system inspired by Alan Kay’s early vision of interactive dynamic media. Webstrates is based on web technology: web pages served by the Webstrates server can be shared in real time among multiple users, on any web-enabled device. By using transclusion, a webstrate can include other webstrates. Webstrates can also include code, making them dynamic and interactive. A webstrate that can act on another, transcluded webstrate is similar to an editor in a classical desktop environment. However, the distinctions between content and tools, and between documents and applications, are blurred: content can be used as a tool, and tools can be shared like regular content. We implemented two case studies to illustrate Webstrates (Figure 7). First, we authored the article collaboratively, using functionally and visually different editors that we could personalize and extend at run-time. Second, we used Webstrates to orchestrate a presentation, using multiple devices to control the presentation, to let the audience participate, and to let the session chair organize the session. We demonstrated the simplicity and generative power of Webstrates with three additional prototypes and evaluated them from a systems perspective. Webstrates runs in our WildOS middleware on the WILD and WILDER rooms, and is used for some of our projects on telepresence.
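The two ideas above, pages shared in real time and pages that transclude other pages, can be illustrated with a toy model. The Python sketch below is purely conceptual and is not the Webstrates implementation or API: the `Store` class, the `{{name}}` inclusion syntax, and the callback-based notification are all hypothetical stand-ins chosen for brevity.

```python
import re


class Store:
    """Toy in-memory stand-in for a server holding shared pages (hypothetical)."""

    def __init__(self):
        self.pages = {}    # page name -> text content
        self.clients = []  # callbacks invoked on every change

    def connect(self, callback):
        """Register a client that is notified of every write."""
        self.clients.append(callback)

    def write(self, name, text):
        """Update a page and propagate the change to all connected clients."""
        self.pages[name] = text
        for notify in self.clients:
            notify(name, text)

    def render(self, name, seen=()):
        """Return the page text with every {{other}} reference expanded."""
        if name in seen:
            return f"[cycle: {name}]"  # stop on self-inclusion

        def expand(match):
            return self.render(match.group(1), seen + (name,))

        return re.sub(r"\{\{(\w+)\}\}", expand, self.pages.get(name, ""))


store = Store()
changes = []
store.connect(lambda name, text: changes.append(name))

store.write("intro", "Hello")
store.write("paper", "Title / {{intro}}")
rendered_before = store.render("paper")  # "Title / Hello"

# Editing the included page changes what the including page shows.
store.write("intro", "Hello, world")
rendered_after = store.render("paper")   # "Title / Hello, world"
```

In this toy model, a page that includes another one always reflects its current content, and every connected client sees each change, which is the property that lets one page act as an editor for another.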
